We’ve been learning for many years how to run Linux for databases, but over time we realized that many of the lessons we learned apply to many other server workloads. Generally, a server process will have to interact with network clients, access memory, do some storage operations and do some processing work – all under supervision of the kernel.

Unfortunately, from what I learned, there’re various problems in pretty much every area of server operation. By keeping the operational knowledge in narrow camps we did not help others. Finding out about these problems requires quite an intimate understanding of how things work and slightly more than beginner kernel knowledge.

Many different choices could be made by doing empirical tests, sometimes with outcomes that guide or misguide direction for many years. In our work we try to understand the reasons behind the differences that we observe when randomly poking at a problem.

In order to qualify and quantify the operational properties of our server systems we have to understand what we should expect from them. If we build a user-facing service where we expect sub-millisecond response times from individual parts of the system, great performance from all of the components is needed. If we want to build a high-efficiency archive and optimize data access patterns, any non-optimized behavior will really stand out. A high-throughput system should not operate at low throughput, etc.

In this post I’ll quickly glance over some areas in memory management that we found problematic in our operations.

Whenever you want to duplicate a string or send a packet over the network, that has to go via allocators (e.g. some flavor of malloc in userland or SLUB in the kernel). Over many years the state of the art in userland has evolved to support all sorts of properties better – memory efficiency, concurrency, performance, etc – and some of the added features were there to avoid dealing with the kernel too much.

Modern allocators like jemalloc have per-thread caches, as well as multiple memory arenas that can be managed concurrently. In some cases the easiest way to make kernel memory management easier is to avoid it as much as possible (jemalloc can be made much greedier, so that it does not give memory back to the kernel, via the lg_dirty_mult setting).

Just hinting to the kernel that you don’t care about page contents gets the pages immediately taken away from you. Once you want a page back, even if nobody else used it, the kernel will have to clean it for you, shuffle it around multiple lists, etc. Although that is considerable overhead, it is far from the worst that can happen.
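The hint-and-retake cycle can be seen from userland. This is a minimal sketch, assuming Linux and Python 3.8+ (for `mmap.madvise`); the function name `discard_and_reuse` is made up for illustration, and the choice of `MADV_DONTNEED` as the hint is an assumption about which hint is meant here.

```python
import mmap

def discard_and_reuse(size=4 * mmap.PAGESIZE):
    """Fill a private anonymous mapping, hint that it is unneeded, read it again."""
    # Private anonymous memory, similar to what an allocator obtains from the kernel.
    buf = mmap.mmap(-1, size, flags=mmap.MAP_PRIVATE | mmap.MAP_ANONYMOUS)
    try:
        buf[:] = b"x" * size
        before = buf[0]
        # Tell the kernel we no longer care about the contents of these pages.
        buf.madvise(mmap.MADV_DONTNEED)
        # Touching the memory again faults in a fresh page that the kernel
        # had to zero-fill first; the old contents are gone.
        after = buf[0]
        return before, after
    finally:
        buf.close()
```

On Linux the second read sees a zeroed page rather than the old data, which is the "cleaning" work the kernel does on your behalf every time you take a page back.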

Your page can be given to someone else – for example, the file system cache, some other process or the kernel’s own needs like the network stack. When you want your page back, you can’t take it from all these allocations that easily, and your page has to come from the free memory pool.

The Linux free memory pool is something that probably works better on desktops and batch processing than on low-latency services. It is governed by the vm.min_free_kbytes setting, which has very scarce documentation and even more scarce resource allocation – on a 1GB machine you can find yourself with 5% of your memory kept free, but then there’re caps on it at 64MB when autosizing it on large machines.

Although it may seem that all this free memory is a waste, one has to look at how the kernel reclaims memory. This limit sets how much to clean up, but not when to trigger background reclamation – that is done at only 25% of the free memory limit – so the memory pool that can be used for instant memory allocation is a measly 16MB – just two userland stacks.
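The arithmetic behind that 16MB figure is short enough to write down. A small sketch, taking the 25% figure from the text above; the function names and the `wake_fraction` parameter are made up for illustration, and only the `/proc/sys/vm/min_free_kbytes` path is a real kernel interface.

```python
def reclaim_headroom_kb(min_free_kb, wake_fraction=0.25):
    """Memory (in kB) between the background-reclaim trigger and the hard limit.

    wake_fraction is the 25% figure quoted in the text, treated here as an
    input rather than a kernel constant.
    """
    return int(min_free_kb * wake_fraction)

def read_min_free_kb(path="/proc/sys/vm/min_free_kbytes"):
    """Read the current setting on a Linux machine."""
    with open(path) as f:
        return int(f.read().strip())

# The autosizing cap of 64MB leaves 16MB of headroom.
capped_headroom_mb = reclaim_headroom_kb(64 * 1024) // 1024

# A manually configured 1GB pool leaves 256MB of headroom.
raised_headroom_mb = reclaim_headroom_kb(1024 * 1024) // 1024
```

With the default 8MB stack size, 16MB is indeed about two userland stacks, while a 1GB pool gives the background reclaimer 256MB of room to work with before allocations start stalling.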

Once you exhaust the free memory limit, the kernel has to go into “direct reclaim” mode – it will stall your program and try to get memory from somewhere (thanks, Johannes, for the vmscan/mm_vmscan_direct_reclaim_begin hint). If you’re lucky, it will drop some file system pages; if you’re less lucky, it will start swapping, putting pressure on all sorts of other kernel caches, possibly even shrinking TCP windows and what not. Understanding what the kernel will do in direct reclaim has never been easy, and I’ve observed cases of systems going into multi-second allocation stalls where nothing seems to work and a fancy distributed system’s failover can declare the node dead.

Obviously, raising free memory reserves helps a lot, and on various servers we maintain a 1GB free memory pool just because the low watermark is too low otherwise. Johannes Weiner from our kernel team has proposed a tunable change in behavior there. That still requires teams to understand the implications of free memory needs and not run with defaults.
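Besides the tracepoint, a cheap way to notice that a machine enters direct reclaim is to watch counters in `/proc/vmstat`. The sketch below assumes counter names beginning with `allocstall`, `pgscan_direct` and `pgsteal_direct`; exact names vary between kernel versions, so it matches on prefixes. The function name is made up for illustration.

```python
DIRECT_RECLAIM_PREFIXES = ("allocstall", "pgscan_direct", "pgsteal_direct")

def direct_reclaim_counters(vmstat_text):
    """Pick direct-reclaim related counters out of /proc/vmstat contents."""
    counters = {}
    for line in vmstat_text.splitlines():
        parts = line.split()
        if len(parts) != 2:
            continue  # skip anything that is not a "name value" pair
        name, value = parts
        if name.startswith(DIRECT_RECLAIM_PREFIXES):
            counters[name] = int(value)
    return counters
```

These are cumulative counters, so a single reading says little. Sampling the file twice (for example with `open("/proc/vmstat").read()`) and comparing the two results shows whether any allocations stalled in between.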

Addressing this issue gets servers into much healthier states, but doesn’t always help with memory allocation stalls – there’s another class of issues that was being addressed lately.

I wrote about it before – the kernel has all sorts of nasty behaviors whenever it can’t allocate memory, and certain memory allocation attempts are much more aggressive – atomic contiguous allocations of memory end up scanning (and evicting) many pages because the kernel can’t find readily available contiguous segments of free memory.

These behaviors can lead to an unpredictable chain of events – sometimes TCP packets arrive and are forced to wait until some I/O gets done as memory compaction ended up stealing dirty inodes or something like that. One has to know the memory subsystem much better than I do in order to build beautiful reproducible test-cases.
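How much contiguous memory is readily available can be read from `/proc/buddyinfo`, which lists, per zone, the number of free blocks of each order (a block of order n is 2^n contiguous pages). A minimal sketch of reading it follows; the function names are made up for illustration.

```python
def parse_buddyinfo(text):
    """Map (node, zone) to the list of free block counts, indexed by order."""
    zones = {}
    for line in text.splitlines():
        fields = line.split()
        # Expected shape: Node 0, zone Normal <count order 0> <count order 1> ...
        if len(fields) < 5 or fields[0] != "Node":
            continue
        node = fields[1].rstrip(",")
        zone = fields[3]
        zones[(node, zone)] = [int(x) for x in fields[4:]]
    return zones

def free_pages_at_or_above(counts, order):
    """Free pages sitting in blocks large enough for an allocation of `order`."""
    return sum(n << o for o, n in enumerate(counts) if o >= order)
```

A zone can have plenty of free pages overall while `free_pages_at_or_above(counts, 3)` is zero, and that is the situation in which an order-3 allocation has to go scanning.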

This area can be addressed in multiple ways – one can soften the allocation needs of various processes on the system (does iptables really need a 128k allocation for an arriving segment to log it via NFLOG to some userland collection process?), it is also possible to tweak kernel background threads to have less fragmented memory (like a cronjob I deployed many years ago) or, of course, to get the memory reclamation order into decent shape instead of treating it as a black box that “should work for you unless you do something wrong” (like using the TCP stack).

Some of our quick changes (like net: don’t wait for order-3 page allocation) were addressing this on a case-by-case basis, but it was amazing to see that this kind of optimization was pulled in to cover many more allocations via a wide-reaching change (mm/slub: don’t wait for high-order page allocation). From my experience, this addresses a huge class of reliability and stability issues in Linux environments and makes system behavior way more adaptive and fluid.

There are still many gray areas in the Linux kernel, and the desktop direction may not always help in addressing them. I have test-cases where the kernel is only able to reclaim memory at ~100MB/s (orders of magnitude away from RAM performance) – and what these test cases usually have in common is “this would happen on a server but never on a desktop”. For example, if your process writes a [transaction] log file and you forget to remove it from cache yourself, Linux will thrash on the inode mutex quite a bit.

There’re various zone reclaim contract violations that are easy to trigger with simple test cases – those test cases definitely expose edge behaviors, but many system reliability issues we investigate in our team are edge behaviors.

In the database world we exacerbate these behaviors when we bypass various kernel subsystems – memory is pooled inside the process, files are cached inside the process, threads are pooled inside the process, network connections are pooled by clients, etc. The kernel ends up being so dumb that it breaks on simple problems like ‘find /proc’ (the directory entry cache blows up courtesy of the /proc/X/task/Y/fd/Z explosion).

Although cgroups and other methods allow constraining some sets of resources within various process groups, they don’t help when a shared kernel subsystem goes into overdrive.

There’re also various problems with memory accounting – although the kernel may quickly report how many dirty file system pages it has, it doesn’t give equal opportunities to the network stack. Figuring out how much memory is in socket buffers (and how full these buffers are) is a non-trivial operation, and on many of our systems we will have much more memory allocated to the network stack than to many other categories in /proc/meminfo. I’ve written scripts that pull socket data from netlink and try to guess the real memory allocation (it is not straightforward math) to produce a very approximate result.
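A much coarser view than the netlink approach is available in `/proc/net/sockstat`, whose `mem` fields for TCP and UDP are counted in pages. The sketch below is this simpler substitute, not the scripts mentioned above; the function name is made up, and the 4096-byte page size is an assumption you should replace with the real one (for example from `os.sysconf("SC_PAGE_SIZE")`).

```python
def socket_memory_bytes(sockstat_text, page_size=4096):
    """Rough per-protocol socket buffer memory from /proc/net/sockstat contents."""
    usage = {}
    for line in sockstat_text.splitlines():
        proto, _, rest = line.partition(":")
        fields = rest.split()
        # Each line is a protocol name followed by "key value" pairs.
        pairs = dict(zip(fields[0::2], fields[1::2]))
        if "mem" in pairs:
            # The "mem" field is counted in pages, not bytes.
            usage[proto.strip()] = int(pairs["mem"]) * page_size
    return usage
```

This gives only a per-protocol total, with no view of individual sockets or how full their buffers are, which is exactly the gap that makes the netlink route necessary.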

Lack of proper memory attribution and accounting has been a regular issue – in 3.14 a new metric (MemAvailable) has been added, which sums up part of cache and reclaimable slab, but if you pay more attention to it, there’s lots of guessing whether your cache or slab is actually reclaimable (or what the costs are).
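To see how much guessing is involved, compare MemAvailable with a naive sum of the categories that look reclaimable. A minimal sketch follows; the function names and the "naive" formula (MemFree + Cached + SReclaimable) are chosen here for illustration and are not the kernel’s own calculation.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo contents into a dict of integers (mostly kB)."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields:
            values[name.strip()] = int(fields[0])
    return values

def available_vs_naive_kb(info):
    """Return (MemAvailable, naive estimate) so the two can be compared."""
    naive = info["MemFree"] + info["Cached"] + info["SReclaimable"]
    return info["MemAvailable"], naive
```

The difference between the two numbers is memory that looks reclaimable on paper but that the kernel itself does not count on getting back cheaply.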

Currently, when we want to understand what is cached, we have to walk the file system, map the files and use mincore() to get a basic idea of our cache composition and age – and only then can we tell that it is safe to reclaim pages from memory. Quite a while ago I wrote a piece of software that removes files from cache (now vmtouch does the same).

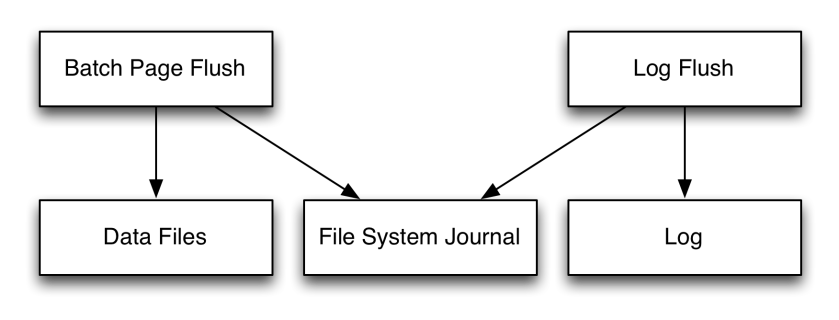

Nowadays on some of our systems we have much more complicated cache management. Pretty much every buffered write that we do is followed by an asynchronous cache purge later so that we are not at the mercy of the kernel and its expensive behaviors.

So, you either have to get the kernel entirely out of your way and manage everything yourself, or blindly trust whatever is going on and lose efficiency on the way. There must be a middle ground somewhere, hopefully, and from time to time we move in the right direction.

In the desktop world you’re not supposed to run your system 100% loaded or look for those 5% optimizations and 0.01% probability stalls. In massively interconnected service fabrics we have to care about these areas and address them all the time, and as long as these kinds of optimizations reach a wider set of systems, everybody wins.
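The basic building block for such a purge is `posix_fadvise` with `POSIX_FADV_DONTNEED`. The sketch below does the purge synchronously, right after the write, which is a simplification of the asynchronous approach described above; the function name is made up, and it assumes a platform where Python exposes `os.posix_fadvise` (Linux does).

```python
import os

def write_then_purge(path, data):
    """Write data through the page cache, then ask the kernel to drop it."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        view = memoryview(data)
        written = 0
        while written < len(view):
            # os.write may write fewer bytes than requested, so loop.
            written += os.write(fd, view[written:])
        # Dirty pages cannot simply be dropped, so flush them to storage first.
        os.fsync(fd)
        # Offset 0 with length 0 means "the whole file".
        os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_DONTNEED)
        return written
    finally:
        os.close(fd)
```

The fsync makes this version expensive on the write path, which is one reason to defer the purge and run it in the background instead.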

TL;DR: upgrade your kernels and bump vm.min_free_kbytes :-)